INTERVIEW WITH ESTELA SUAREZ

The Future of Digitalisation: Quantum Computers and Neuromorphic Computing

Whether for automatic speech recognition, self-driving cars or medical research, our need for computing power keeps growing. Yet the room for further performance gains in microchips is shrinking. What comes next?

It has been more than 50 years since engineer Gordon Moore, who later co-founded the semiconductor manufacturer Intel, predicted that the capacity of our digital devices would double every two years for the foreseeable future. His forecast rested on the observation that the number of transistors on a silicon microchip could be doubled every two years. That meant twice the computing power in the same space at only a marginal increase in cost. In the decades that followed, tech companies did in fact manage to double the computing power of silicon chips regularly, driving rapid technological progress. But will that continue? Supercomputing expert Estela Suarez explains why Moore’s Law will not hold forever, even though development will continue apace, and why this matters for advances in medicine, transport and climate prediction.
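
The doubling rule described above can be written as N(t) = N0 · 2^(t/T), with a doubling period of T = 2 years. The short Python sketch below (the function names are illustrative, not taken from the article) shows how quickly this compounds: 50 years correspond to 25 doublings, a factor of roughly 33.5 million. It models the idealised rule only; real chips never tracked the curve exactly.

```python
def moore_growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Factor by which transistor count grows if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def projected_transistors(initial_count: float, years: float, doubling_period: float = 2.0) -> float:
    """Idealised transistor count after `years`, starting from `initial_count`."""
    return initial_count * moore_growth_factor(years, doubling_period)


# 50 years at one doubling every two years = 25 doublings.
print(moore_growth_factor(50))  # 33554432.0
```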

Estela Suarez, since 1965 technological progress has seemed a sure constant, in line with what is known as “Moore’s Law”. But now we are hearing that the principle is reaching its limits. Why is that?

Physical limitations are the issue: the structures on a chip, such as its circuits, cannot be made smaller indefinitely. At some point we will reach the size of an atom, and that will be the end at the very latest.

Would an end to microchip optimisation then also mean an end to increased performance?

An end to miniaturisation means that we’ll have one fewer way of increasing performance at our disposal, so we’ll have to use other strategies. There are other optimisation methods that deliver higher computing power and are not directly related to the size of the structures on the microchip. For example, many applications struggle to move data from memory to the processor fast enough: they are constantly waiting for data before they can continue processing. New memory technologies are being developed to address this. They offer more bandwidth, so data can be accessed more quickly. This increases the effective computing power, because more operations can be performed in the same amount of time. But even this strategy will reach its limit at some point. That’s why researchers and technology companies are always on the lookout for completely new approaches to make future computers ever more powerful.
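
The effect of memory bandwidth on performance can be sketched with the roofline model, a common rule of thumb in high-performance computing that the interview itself does not mention. The processor figures below are invented for illustration. A program that performs few arithmetic operations per byte it loads is capped at bandwidth × operations per byte. Raising the bandwidth therefore raises its attainable speed, until the processor’s peak arithmetic rate becomes the limit instead.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, flops_per_byte: float) -> float:
    """Roofline estimate of attainable performance in GFLOP/s.

    A program is limited either by the processor's peak arithmetic rate or by how
    fast memory can deliver data (bandwidth x operations performed per byte loaded).
    """
    memory_limit = bandwidth_gb_s * flops_per_byte
    return min(peak_gflops, memory_limit)


# Hypothetical processor with a peak of 1000 GFLOP/s.
# A data-hungry program doing 0.25 operations per byte loaded is memory-bound:
print(attainable_gflops(1000, 100, 0.25))  # 25.0 (100 GB/s of bandwidth)
print(attainable_gflops(1000, 400, 0.25))  # 100.0 (4x the bandwidth, 4x the speed)
# A compute-heavy program (50 operations per byte) already runs at the peak:
print(attainable_gflops(1000, 100, 50))    # 1000
```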

Why is a further increase in computing power important – can you give examples of applications or technologies that can only be developed and deployed if computing power continues to increase?

In the sciences, we want to use computers to analyse increasingly complex phenomena so that we can draw the right conclusions. These phenomena have to be simulated with ever greater accuracy, which increases our need for computing power. Weather and climate models are one example. They are fed data from a variety of sources and take many interrelated aspects into account, from the chemistry of the atmosphere and ocean dynamics to geography and the vegetation in different regions of the Earth’s surface. All of these interrelated aspects, and many more, need to be simulated to accurately predict how climate conditions change over time. Today, these calculations take anywhere from weeks to months of computing time, even on the most powerful computers. In concrete terms, higher computing power would enable more accurate predictions, which would in turn help to prevent disasters caused by extreme weather and to take more targeted measures to mitigate climate change.
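
Why greater accuracy is so expensive can be illustrated with a back-of-the-envelope scaling estimate. The sketch below assumes a generic grid-based simulation with an explicit time-stepping scheme: refining the grid by a factor r in each of three spatial dimensions multiplies the number of cells by r³, and a stability condition of the CFL type forces the time step to shrink by the same factor r. Under these assumptions, halving the grid spacing costs about 16 times as much computation. Real weather and climate models are far more complex (many refine only horizontally, for instance), so this is only an illustration of the trend.

```python
def relative_cost(refinement: float, spatial_dims: int = 3) -> float:
    """Relative cost of refining an explicit grid simulation by `refinement` per dimension.

    Assumption: the cell count grows as refinement**spatial_dims, and the time step
    must shrink in proportion to the grid spacing (CFL-type stability condition),
    which adds one more factor of `refinement`.
    """
    return refinement ** (spatial_dims + 1)


print(relative_cost(2))                  # 16: half the grid spacing in 3D
print(relative_cost(2, spatial_dims=2))  # 8: refining only horizontally
```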

What other areas does this apply to?

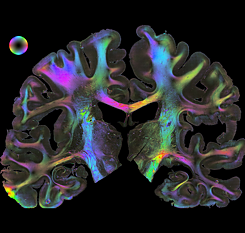

There are similar situations in other areas of research and industry, such as medicine. Today’s computers cannot yet simulate an entire human brain, although such a simulation could decisively improve our understanding of neurological diseases. Drug development also requires high computing power to determine the right composition of active ingredients more quickly. Supercomputers are also used to identify the new materials needed to manufacture more environmentally friendly products. The development of language models, too, requires ever more powerful computers. These models are already used in many places in our everyday lives, such as in mobile phones, cars and smart TVs.

At Forschungszentrum Jülich, you are working on quantum technology, which promises higher computing speeds for certain tasks. What possibilities might it open up?

This approach is being pursued intensively worldwide, and not just at research institutions. Large IT companies such as Google, with which we partner, are looking into it too. Quantum computers can solve certain problems much faster than standard computers because they perform calculations in a completely different way. They exploit properties of quantum physics that allow for more compact data storage and much faster execution of certain operations. Quantum computers are not necessarily better than “standard” digital computers for every application. But they are extremely efficient, especially for optimisation problems, such as finding the minimum value in a multidimensional parameter space. Navigation is a practical example of this kind of problem: when you enter a destination into your navigation system, the computer in your device calculates the best route, taking distance, speed limits, current traffic conditions and other factors into account. This is a typical optimisation problem that can run much faster on a quantum computer than on a conventional one. Using a quantum computer to calculate the route for one person going on holiday would be overkill. But imagine a future in which millions of cars in autopilot mode have to make their way along Germany’s roads in a coordinated manner to get every passenger to their destination as quickly as possible. This would require huge computing power, which will probably only be feasible with quantum computers.
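
For a single car, the route search described above is a classic shortest-path problem that conventional computers already handle well. The following is a minimal sketch using Dijkstra’s algorithm, with travel times (which could already reflect speed limits and current traffic) as edge weights. The road network is invented, and real navigation systems use more elaborate and often proprietary methods. Coordinating millions of vehicles jointly is a far larger combinatorial problem, and that is the case in which Suarez expects quantum computers to be needed.

```python
import heapq


def fastest_route(graph: dict[str, dict[str, float]], start: str, goal: str) -> tuple[float, list[str]]:
    """Dijkstra's algorithm: minimal total travel time from `start` to `goal`.

    `graph[u][v]` is the travel time (e.g. in minutes) on the road from u to v.
    """
    queue = [(0.0, start, [start])]   # (time so far, current junction, path taken)
    best = {start: 0.0}               # fastest known time to each junction
    while queue:
        time, node, path = heapq.heappop(queue)
        if node == goal:
            return time, path
        if time > best.get(node, float("inf")):
            continue  # stale entry: a faster way to this junction was already found
        for neighbour, travel in graph.get(node, {}).items():
            new_time = time + travel
            if new_time < best.get(neighbour, float("inf")):
                best[neighbour] = new_time
                heapq.heappush(queue, (new_time, neighbour, path + [neighbour]))
    return float("inf"), []           # goal unreachable


# Invented road network with travel times in minutes.
roads = {
    "A": {"B": 10, "C": 4},
    "C": {"B": 3, "D": 12},
    "B": {"D": 5},
}
print(fastest_route(roads, "A", "D"))  # (12.0, ['A', 'C', 'B', 'D'])
```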

To date, not a single quantum computer is in use outside of research. How far are we from quantum technology actually becoming usable?

Quantum technology today is at about the same level as conventional computers were in the 1940s. There are prototype systems that have shown very promising results, but we are still struggling with problems such as unreliability and computational errors. The software environment is also still in its infancy. Many of the practical tools we are accustomed to having for conventional computers, such as highly optimised compilers, specialised libraries and debuggers, are not yet available for quantum computers. Algorithm development also has a long way to go.

Mobility of the future? Autonomous driving requires an enormous amount of computing power.

| Photo (detail): © Adobe

What other ways or ideas are there for enabling an increase in data processing and how far along are they in their development?

Neuromorphic computing is another innovative technology. The idea is to build a computer that mimics the way the brain works. Our brain is not very fast at mathematical operations, but thanks to the massive interconnection of its neurons, it is extremely efficient at learning and at identifying links between different observations. For tasks such as pattern recognition or language learning, our brain requires considerably less energy than a conventional computer. Neuromorphic computers aim to reproduce these capabilities and apply them to data processing.
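
Many neuromorphic chips model neurons that communicate through discrete spikes rather than continuous numbers. Below is a minimal sketch of one common abstraction, a discrete-time leaky integrate-and-fire neuron. The parameter values are arbitrary assumptions and do not come from any specific chip. The neuron accumulates its input, slowly leaks charge, and emits a spike only when a threshold is crossed. Because spikes are sparse, hardware built around this idea only has to do work when events occur, which is one reason it can be energy-efficient.

```python
def simulate_lif(inputs: list[float], leak: float = 0.9, threshold: float = 1.0) -> list[int]:
    """Discrete-time leaky integrate-and-fire neuron; returns the steps at which it spikes."""
    potential = 0.0
    spike_times = []
    for t, current in enumerate(inputs):
        potential = leak * potential + current  # lose some charge, then add the new input
        if potential >= threshold:
            spike_times.append(t)               # fire a spike ...
            potential = 0.0                     # ... and reset the membrane potential
    return spike_times


print(simulate_lif([0.3] * 10))  # [3, 7]: a constant weak input yields regular spikes
print(simulate_lif([0.0] * 10))  # []: no input, no spikes, no work
```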

What are the current forecasts? How fast do experts think these technologies will develop in the coming years and decades? What assumptions are they basing their forecasts on?

The current trend is toward diversity. We assume that no single technical solution will be able to drive progress on its own. This is a trend we’ve seen for years in the development of supercomputers, for example. Supercomputers increasingly combine different computing and storage technologies to achieve maximum performance with maximum efficiency. The technological development of the individual components is very dynamic, and completely new approaches keep emerging. One goal of research in this field is therefore to interconnect the various components efficiently. At the Jülich Supercomputing Centre, we are developing a modular supercomputing architecture that brings together different approaches as modules within a single supercomputer. We are also working on combining conventional computers with quantum computers.
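
The idea of combining different technologies as modules can be sketched as a simple placement rule: each step of a workflow runs on the module best suited to that kind of work, with a general-purpose module as the fallback. The module names, their capabilities and the `assign` function below are hypothetical illustrations, not the actual software or configuration used in Jülich.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    name: str
    suited_for: frozenset[str]  # kinds of work this module handles well


# Hypothetical modules; names and capabilities are assumptions for illustration only.
MODULES = [
    Module("cluster", frozenset({"io", "control", "irregular"})),       # general-purpose CPUs
    Module("booster", frozenset({"dense_linear_algebra", "ai_training"})),  # accelerators
    Module("quantum", frozenset({"optimisation"})),                     # quantum module
]


def assign(workflow: list[tuple[str, str]], default: str = "cluster") -> dict[str, str]:
    """Map each (step, kind) of a workflow to the first module suited to that kind of work."""
    placement = {}
    for step, kind in workflow:
        placement[step] = next((m.name for m in MODULES if kind in m.suited_for), default)
    return placement


workflow = [
    ("read_observations", "io"),
    ("simulate", "dense_linear_algebra"),
    ("optimise_schedule", "optimisation"),
    ("write_results", "io"),
]
print(assign(workflow))
```

A real modular system also has to move data between modules and schedule them concurrently; the sketch only captures the matching of work to hardware.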

JUWELS, currently the fastest supercomputer in Europe, at Forschungszentrum Jülich.

| © Forschungszentrum Jülich / Wilhelm-Peter Schneider
