In ancient times, people marveled at oracles, those divine messages that unveiled mysteries and could predict the future. Today, in the digital age, we have something that seems to have come straight out of such legends: artificial intelligence (AI). An invisible entity that can predict the next day’s weather, identify illnesses from an image, and even select the ideal music to match our mood. This almost verges on magic, right? AI could be likened to a modern prophet that aids us in making decisions, from the most trivial to those that could radically change our lives.

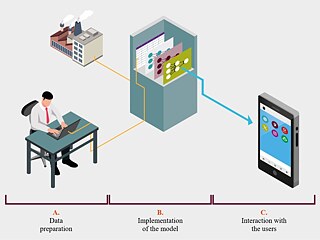

Artificial intelligence astounds us with its ability to perform tasks that normally require human ingenuity, such as creating images or writing poems. Perhaps you have seen photos that have left you speechless, like the one of Pope Francis wearing haute couture or portraits of historical figures in contemporary styles, only to learn that they were generated by a computer algorithm. The ability of artificial intelligence to produce creative content is, in fact, amazing. However, this ability also raises an interesting question: How can algorithms generate images so convincing that they confuse those who see them?

To understand how artificial intelligence works, let’s think of it as a three-step process (Diagram 1). First, you need to prepare the data. This means that people select the information that the systems are going to use (Section A). Next comes the training, where the algorithms process and analyze the data to identify patterns. This analysis allows them to develop the ability to generate a response when they receive new information (Section B). The process culminates when those algorithms reach our devices in the form of applications, allowing us to interact with them directly (Section C).

Diagram 1. Generating an Artificial Intelligence Model
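For readers who like to see ideas in code, the three steps can be sketched in a few lines of Python. This is only a toy illustration, not how real AI systems are built: the weather observations are invented, and the names `observations`, `train`, and `predict` are hypothetical.

```python
from collections import Counter, defaultdict

# Step A (prepare the data): people choose what the system will see.
# These daily observations are made up for illustration.
observations = [
    "sunny", "sunny", "rainy", "rainy", "sunny",
    "sunny", "sunny", "rainy", "cloudy", "sunny",
]

def train(days):
    """Step B (training): count which weather tends to follow which."""
    transitions = defaultdict(Counter)
    for today, tomorrow in zip(days, days[1:]):
        transitions[today][tomorrow] += 1
    return transitions

def predict(model, today):
    """Step C (application): answer a new question from the learned pattern."""
    if today not in model:
        return None  # the system never saw this kind of day
    return model[today].most_common(1)[0][0]

model = train(observations)
print(predict(model, "sunny"))
print(predict(model, "snowy"))
```

The "prediction" is nothing more than the pattern that appeared most often in the chosen data. Asked about a snowy day, which never appeared in the observations, this toy system has no answer at all.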

BEYOND ALGORITHMS

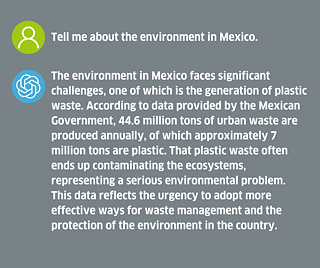

Because of increasingly advanced algorithm designs like ChatGPT, we are experiencing a kind of technological wizardry. These systems truly appear to be oracles of the modern era with astonishing capabilities to compose poetry, analyze images, or respond to a variety of questions. To test these capabilities, we asked ChatGPT to write a paragraph about the environment. This exercise not only highlights its capacity for creating coherent content but also opens a space for reflection: Can we think of ChatGPT as a modern-day oracle?

If we consider the grammatical accuracy, the meticulously selected vocabulary, and the cohesive ideas, reading this paragraph can lead us to believe that it was written by an expert. However, let’s think about how this paragraph was created and the mechanisms underlying its apparent perfection.

When addressing environmental issues, an expert would undoubtedly ask for details to focus their analysis. In contrast, ChatGPT automatically associates “environment” and “waste” without any consideration of other possibilities. This is because, when the algorithm was trained, it probably used a large volume of texts in which those terms appeared together. So, the system learned to relate them without taking other approaches into account. ChatGPT’s tendency to generate a response without asking for additional information, or taking context into consideration, has led some researchers to call these language systems “stochastic parrots” because they repeat information without any in-depth understanding. Yet, the capability of artificial intelligence to provide answers to almost any question is what evokes the image of ancient oracles since they too tended to give enigmatic, incomprehensible answers.
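The idea that a system relates terms simply because they appeared together can be illustrated with a small Python sketch. It is a deliberate simplification and not a description of how ChatGPT works internally: the four training sentences are invented, and `corpus`, `STOPWORDS`, and `associations` are hypothetical names.

```python
from collections import Counter

# Invented training texts, deliberately skewed toward one topic.
corpus = [
    "the environment suffers when waste is dumped in rivers",
    "reducing waste protects the environment",
    "plastic waste harms the environment and wildlife",
    "forests are a vital part of the environment",
]

# Common filler words that carry little meaning on their own.
STOPWORDS = {"the", "is", "in", "a", "of", "and", "are", "when"}

def associations(texts, target):
    """Count the words that share a sentence with `target`."""
    counts = Counter()
    for text in texts:
        words = text.split()
        if target in words:
            counts.update(
                w for w in set(words)
                if w != target and w not in STOPWORDS
            )
    return counts

counts = associations(corpus, "environment")
print(counts.most_common(3))
```

Because three of the four sentences mention waste, "waste" becomes the strongest association for "environment", while "forests" barely registers. The program has not decided that waste matters most; it has only counted what the selected texts contained.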

This analogy between artificial intelligence and oracles highlights a significant question for us, the users: Should we base our decisions solely on algorithms, much as people in the past trusted the oracles? While some experts see algorithms as tools for objective decision-making, others warn about the risks of putting blind trust in technology. The key to addressing this dilemma and, at the same time, determining whether AI can be thought of as a modern oracle is to recognize that the data that feeds these systems can be biased. Algorithms formulate responses based on the information they receive, so their conclusions will inevitably be influenced by those biases. This occurred in the above exercise where, when prompted for a text about the environment, the algorithm focused on pollution and omitted other possible perspectives.

Although the capability of algorithms to create content is impressive, it is worth recalling that they operate based on the data provided by their developers. Thus, just as the oracles of the past were not infallible and their pronouncements had to be interpreted by those who consulted them, algorithms’ responses must also be evaluated by their users. All this leads us to conclude that artificial intelligence is not an oracle of our era but rather a tool with its own constraints. Although it executes tasks efficiently, it does not understand context and, above all, nuance as a person would. Its usefulness is undeniable, but it is crucial to use it with a critical approach and an awareness of its limitations.

02/2024