People versus robots

“Evolution doesn’t care whether we’re happy”.

Are we allowed to use violence against humanoid robots? When the first of them demands civil rights, it will be too late, says neuroethicist Thomas Metzinger. Why humanoid robots create “social hallucinations” in us and what that does to our psyche.

By Moritz Honert

Mr Metzinger, in Japanese hotels, humanoid robots are already being used as concierges, while US companies have recently introduced a new generation of sex dolls that can speak and react to touch. Are robots becoming ever more similar to us?

In areas where humans interact with machines, we will increasingly meet with such humanoid-looking interfaces. Although this is only a small part of robotics, as far as these anthropomorphic interfaces are concerned the technology is now very advanced. In the lab we can measure your head in 20 minutes and create a photorealistic avatar from the data; we can give it view-tracking movements and emotional facial expressions. If we steal audio data from your mobile, we can even make it speak like you. In a video chat, your avatar would be indistinguishable from you.

You’ve concerned yourself for years with the image that man has and projects of himself. As a neuroethicist, do you see problems when our ticket machines soon look like counter clerks?

I’m afraid these humanoid robots will in most cases serve to manipulate consumers. After all, the robot will analyse your facial expressions and begin to understand: Is the customer bored? What do they want to hear?

When our computer goes bonkers, we yell at it

And the machine can exploit that?

We have so-called mirror neurons in the brain. When a person smiles at you, you mimic that with your facial muscles. This can be used to influence people. Human-like robots disguise the fact that they are primarily committed to the interests of their programmers, not ours, just as Google’s algorithm optimizes its hits not for its users but for its advertisers. If in spite of yourself you find a machine so very nice, you may book an upgrade because it smiles so contagiously.

But I know I'm dealing with a robot.

Maybe not. Humanoid robots trigger something within us that I call “social hallucinations”. We humans have the capacity to imagine we’re dealing with a conscious counterpart even if that’s not so. There are precedents for this: children and primitive peoples often believe in an ensouled nature. When our computer goes bonkers, we yell at it. Other people love their car or miss their cell phone.

Sounds crazy.

Roboy

| © Roboy 2.0 – roboy.org

But it’s evolutionarily meaningful. Imagine being in a dark forest at night. There’s rustling in the bushes. The animals that survived were of course those that imagined a predator ten times rather than those that missed it once. Evolution in a sense rewarded paranoia. And that’s why even today we humans still have irrational fears. Evolution doesn’t care whether we’re happy.

Nevertheless, some manufacturers deliberately avoid making their robots look like humans. For example, the German “Roboy” looks more like Casper the Friendly Ghost, with his cute little child’s head.

If the robot is too real, many people find it really scary. Researchers call this grey area the “uncanny valley”. It’s like a horror movie. When we notice that something is looking at us that’s actually dead, it stirs primitive fears.

The subject of robots has in fact occupied humans for millennia. Even in the Iliad Hephaistos creates automatic servants. How do you explain this fascination?

One motive is surely the desire to play God for a bit. And the fear of death is also an important point. With transhumanism, a new form of religion has now emerged, which dispenses with church and God, yet nevertheless offers the denial of mortality as a core function. Robots and especially avatars suggest the hope that we could defeat death by living on in artificial bodies. For some researchers, it would also be the ultimate achievement to be the first to develop a robot with human intelligence or even consciousness.

According to a study, 90 out of 100 experts expect us to do exactly that by 2070.

I wouldn’t overestimate such forecasts. In the 1960s, people thought that it would only take 20 years before we had machines as smart as humans. Such statements are a good way of raising money for research; by the time they turn out to be wrong, the expert is no longer alive.

The boundaries between man and machine are becoming more fluid.

What is a robot anyway? What makes it different from a machine?

I would define it by the degree of autonomy. A machine has to be started and stopped by us. Many robots can do that on their own. And robots generally have more flexibility than, for example, a drill; they can be programmed.

If you define life as organized matter with the capacity to reproduce, wouldn’t robots programmed to build new models be life?

No, because they have no metabolism. But the distinction between natural and artificial systems may not be so easy to draw in future. For example, if we manufacture hardware that is no longer made of metal or silicon chips, but of genetically engineered cells. It’s quite possible that soon there will be systems that are neither artificial nor natural.

TV series like Westworld predict that we will treat the robots like slaves. Would you agree with that?

Maybe. But you have to distinguish clearly between two things. It makes a big difference whether these systems are intelligent, or whether they are actually capable of suffering. Is it even possible to commit violence against Dolores, the android in Westworld? The real ethical problem is that such behaviour would damage our self-model.

How so?

It’s like virtual reality. If criminal acts become consumable in this way, we easily cross the threshold to self-trauma. Man is brutalized. It’s a very different matter, for example, whether violent pornography is viewed two-dimensionally or “immersed” in a three-dimensional and haptic experience. It’s quite possible that disrespectful handling of humanoid robots leads to general antisocial behaviour. Furthermore, humanity has always used the latest technical systems as metaphors of self-description. When the first watches and mechanical figures appeared in 1650, Descartes described the body as a machine. If we now encounter humanoid robots everywhere, the question arises whether we, too, will increasingly see ourselves as genetically determined automatons, as bio-robots. The boundaries between man and machine are becoming more fluid.

“What? A machine? This is my friend!”

With what consequences for everyday life?

Imagine growing up in a generation that plays with robots or avatars which are so lifelike that the distinction in the brain between living and dead, reality and dream, is no longer being formed to the degree it still is with us. This doesn’t necessarily mean that these children will automatically have less respect for life. But I can already imagine that in 20 years someone in an ethical debate will pound on the table and say: “Now, slow down, it’s just a machine!” And the answer then is: “What? A machine? This is my friend! Always has been.”

Philosophers like Julian Nida-Rümelin have therefore called for us not to build humanoid robots at all.

For a more nuanced solution, we should ask ourselves whether there are actually any meaningful applications. For example, humanoid care robots could make people with dementia feel like they’re not alone. Of course, you could argue again that this is degrading or patronizing towards the patients.

What limits would you draw?

For me, the key point is not what the robots look like, but whether they have awareness, whether they are capable of suffering. That’s why I’m decidedly opposed in questions of research ethics to the idea of equipping machines with a conscious self-model, a sense of self. I know that this is still far in the future. But once the first robot demands civil rights, it’s too late. If you then pull the plug, it could be murder. I call for a moratorium on synthetic phenomenology.

So in the medium term, we would need something like a global code of ethics for robotics.

Yes. Though it’s perfectly clear that the big players would ignore it, as the US today ignores the International Criminal Court. I find it especially noteworthy that most of the discussions that are already being conducted on the topic today aren’t about ethics, but about legal compliance and liability.

Artificial intelligence forces us to confront ourselves

Because otherwise it can be expensive in case of doubt?

Right. There are really a lot of questions. Imagine driving a Google car in a roundabout, plus three more self-driving cars and two normal ones. Then a dog runs onto the street. The cars recognize that there’ll be an accident, and now have to calculate the least damage. What is the life of an animal worth? What is the life of a non-Google customer worth? What if one of the participants is a child or pregnant? Do they count double? And what about Muslim countries where women have limited rights? Is it the number of surviving men that’s important there? What if there are Christians in the other car? That’s the beauty of robotics – we finally have to show our true colours regarding all of these questions. Artificial intelligence forces us to confront ourselves.

The philosopher Éric Sadin has written in Die Zeit that to assign decision-making power to algorithms is an attack on the human condition.

In fact, it’s a historically unique process that human beings cede autonomy to machines. The deeper problem is that every single step could be rationally, even ethically, imperative. But of course there’s the danger that the development will get out of hand.

With what consequences?

A particular problem is military robotics. Drones will soon be so fast and intelligent that it makes no sense to ask for an officer’s approval in a combat situation. An arms race is starting and sooner or later the systems will have to react to each other independently. We already have this with trading systems on the stock market: in 2010 there was the flash crash, in which billion-dollar losses were incurred within seconds. Who knows what losses a military flash crash would produce.

The horror vision that machines could someday become dangerous is a popular theme of science fiction. In fact, even recognized researchers such as Nick Bostrom of Oxford University now warn of uncontrollable superintelligence.

You can speculate of course whether we’re just the ugly biological stirrup holders for a new level of evolution. But the notion that robots are now starting to build factories on their own and imprisoning us in reservations is utterly absurd. On the other hand, novel problems could in fact arise if an ethical superintelligence, which only wants the best for us, concludes that it would be better for us not to exist because our lives are overwhelmingly painful. But the bigger problem right now is not that evil machines are overrunning us, but that this technology opens new doors for our remote control. If algorithms start to optimize activity on social networks, or news channels begin to run on their own, then political processes could be manipulated without our noticing it.

In his book "Rise of the Robots", the Silicon Valley developer Martin Ford fears mainly economic consequences.

Yes, there are studies that suggest that 47% of jobs will be lost by 2030. The super-rich and their analysts take this seriously because they see that the introduction of robots will make them even richer and the poor even poorer. And they also see which countries are now making the transition to artificial intelligence, and which are failing to do so and will be definitively left behind in history. In the past, people used to say quite naively: “Whenever in the history of humanity new technologies emerged, they also created new jobs”. This time around that could turn out to be nothing but a pious wish.

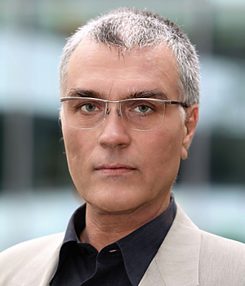

Thomas Metzinger

| © IGU Pressestelle

Thomas Metzinger, 59, received us in an office at the University of Mainz. He is head of the Theoretical Philosophy Department, Director of the MIND Group and the Neuroethics Research Centre. From 2005 to 2007 he was President of the Society for Cognitive Science. His focus is the exploration of human consciousness. Metzinger says that we have neither a self nor a soul, but only a “self-model” in our heads, which we do not experience as a model. In his book Der Ego-Tunnel (Piper) (The Ego Tunnel), he makes this theory intelligible even for laymen. Metzinger advocates linking the philosophy of mind with brain research and has been reflecting on artificial intelligence for more than a quarter of a century. He owns a vacuum cleaner robot, about which he is enthusiastic. He has not named it yet, however.
