Listening out for the detail in AI-driven music

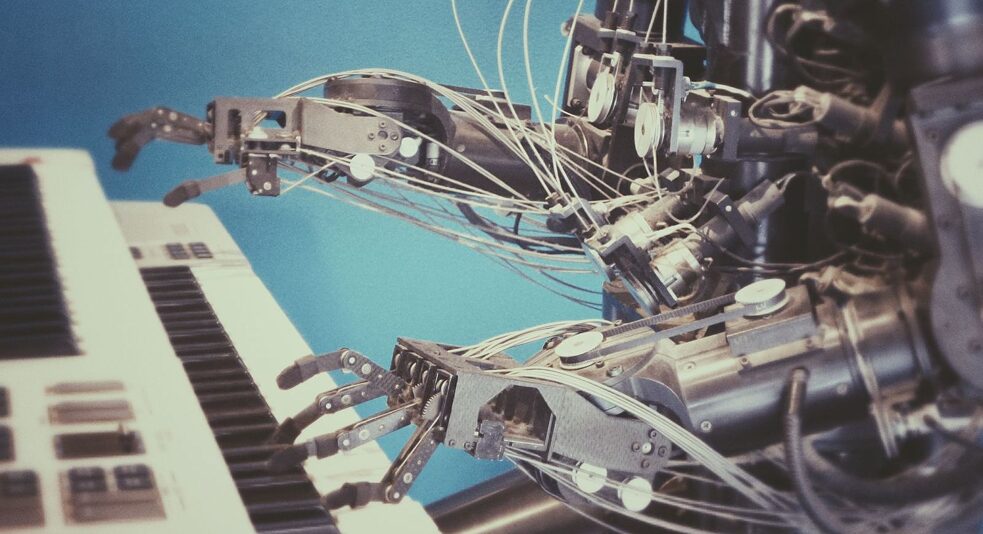

©Lucrezia Carnelos Unsplash

There’s no neat thesis about the role of AI in music, according to researcher and sound artist Oliver Bown. The key is to listen closely for the detail and to be able to distinguish the machine’s contribution from the human’s.

You are listening to a music composition that somebody tells you has been composed by artificial intelligence, and it is unnervingly good. There are rich harmonies that pluck at your heartstrings and surprising turns that hold your interest. Should you be dumbstruck at how smart AI is becoming? Is this the beginning of the AI takeover? Here are some thoughts to settle your nerves.

Look out for the smoke and mirrors

If what you are hearing had even the slightest human involvement in its production, it becomes very hard to tell what the AI actually contributed. AI systems can be very good at generating melodic structures that sound coherent, but even a relatively incoherent melody can form the basis for a great piece of music.

James Humberstone from the Sydney Conservatorium of Music makes this point well in a TEDx talk, in which he gets his audience to pick notes at random to make a melody. He takes their melody and applies a swathe of music production techniques, including looping the result on endless repeat. The melody starts to take shape, partly because listeners become habituated to it over time. Adding harmonies and organising melodies into larger structures using repetition can turn something average into something amazing. Needless to say, tweaking a note or two directly can turn a melody into something else entirely; even if 90% of the notes were chosen by an AI system, the system may still have played almost no role in the resulting music.

The cherry-picking of results also undermines our ability to judge whether the AI is doing anything good. It is not hard to flick through 100 one-bar melodies to find something catchy. Even if no person interferes with the output of an algorithm, its designers may have hard-wired a great deal into the algorithm itself, constraining its output to a space of beautiful possibilities. This kind of ‘templating’ can hide extra human input.

This is not to say that there isn’t incredibly sophisticated work in music generation going on. But when it comes to judging its sophistication, we have a very wide tolerance for what we might perceive to be music made by humans. In fact, the first claims that AI music systems had fooled human judges were made decades ago.

Some of the most sophisticated work of late has involved generating original music from the waveform up. Even though these systems operate at the level of milliseconds, they can make music with coherent and complex evolutions over minutes. This is truly awe-inspiring stuff. Check out Google’s WaveNet project and OpenAI’s Jukebox project. At their most creatively interesting, both tend to output dreamy-sounding streams of musical consciousness that sound alien, and perhaps more glaringly machine-generated, but all the more engaging for it.

But even when the hands of humans are all over the final musical product, there is no need to fixate on the spectacle of AI performing talented feats. There is nothing wrong with working with modest AI tools that help you compose music, perhaps by suggesting some almost-good ideas that are unusual enough to break you out of your creative rut.

Australian tech group Uncanny Valley won this year's Eurovision-inspired AI Song Contest

© Uncanny Valley

Musical artists are not cheating by using the AI, and the AI is not cheating by using them, for the simple reason that making music is not a technical competition! Collaboration of this kind is, after all, one of the main ways these systems are expected to be used. It only becomes a problem if people are led to believe that no human intervention was involved and that the AI is more remarkable than it is.

AI systems are not social beings

The most sophisticated AI music systems today “learn” from listening to lots of human music, and at the cutting edge we are beginning to see amazing competence in deriving complex structure, as well as abstract musical concepts that emerge entirely from this analysis of data. Could such a system produce highly original music that was aware of the cultural context in which it was situated? Could it model the qualities of music that stimulate human emotional responses in order to create specific reactions? I would argue that, given what we know, there is no reason why such advances would not be possible to some plausible degree. I think it would be wrong to argue that, just because the system doesn’t “feel” or “understand” the music it created, that music is any less worthy of being credited as real music.

Computing pioneer Alan Turing proposed that a computer program whose responses to an interrogator’s questions are indistinguishable from a human’s should be regarded as possessing real intelligence, and I believe we can establish this kind of musical intelligence in algorithms.

The automation of music by AI could have negative impacts on age-old systems of cultural interaction

Photo Credit: Franck V / Unsplash

But there is still a difference between this and what we social beings do when we make music: we often care very much about the backstory and provenance of the music we enjoy. Music is a medium for social interaction and meaning, and for marking our place in society. We may care less for AI-generated music because the algorithms that make it are not embedded in these social concerns; alternatively, the technology may become culturally relevant through the ways other humans use it.

Some caution about what’s coming

Generative media refers to any kind of “cultural product” that is made by machines, such as music, literature, art and film. Music may seem fairly harmless, but the generation of targeted advertising copy or, worse still, political campaign messaging is clearly more concerning. The power to sway entire populations threatens our democracies and social structures, and one could reasonably fear this kind of AI takeover more than killer drones.

But if, as I have just argued, music is also a powerful part of our social bedrock, then it too has the capacity to play a role in such technologies of coercion. The delight you take in a symphony or rock ballad is driven by neural pathways with deep evolutionary histories. Your taste is the product of your social context and lived experience, interacting with your developing brain, which has been shaped by millennia of evolution. As such, we are predictable beings, susceptible to cultural influence.

Oliver Bown

© Oliver Bown

Short of deliberate manipulation, AI-generated music could simply “disrupt” in unwelcome ways: the automation of music by AI can have negative impacts on age-old systems of cultural interaction, not least for people who make a living from creating music. And yet history is full of examples of people being overly cautious about, or dismissive of, an emerging technology while a new generation embraces it, as with photography, synthesisers, drum machines and Photoshop. The negative impacts of such technologies come hand in hand with phenomenal new possibilities.

These scattered ideas don’t boil down to a single, neat thesis about the use of AI in generating human cultural products; instead, they suggest how such technologies may be the bearers of new complexity and diversity in the cultural landscape. Make sure you listen out for this detail.

Learn more about Oliver Bown's views on the future of creative AI here.